NOVA Wonders Can We Build a Brain?

Season 45 Episode 104 | 53m 42s | Video has Closed Captions

How does today's artificial intelligence actually work, and is it truly intelligent?

Artificially intelligent machines are taking over. They’re influencing our everyday lives in profound and often invisible ways. They can read handwriting, interpret emotions, play games, and even act as personal assistants. They are in our phones, our cars, our doctors’ offices, our banks, our web searches…the list goes on and is rapidly growing ever longer.

National corporate funding for NOVA Wonders is provided by Draper. Major funding for NOVA Wonders is provided by National Science Foundation, the Gordon and Betty Moore Foundation, the Alfred P....

TALITHIA WILLIAMS: What do you wonder about?

MAN: The unknown.

What our place in the universe is.

Artificial intelligence.

Hello.

Look at this, what's this?

Animals.

An egg.

Your brain.

Life on a faraway planet.

WILLIAMS: "NOVA Wonders" investigating the biggest mysteries.

We have no idea what's going on there.

These planets in the middle we think are in the habitable zone.

WILLIAMS: And making incredible discoveries.

WOMAN: Trying to understand their behavior, their life, everything that goes on here.

DAVID COX: Building an artificial intelligence is going to be the crowning achievement of humanity.

WILLIAMS: We're three scientists exploring the frontiers of human knowledge.

ANDRÉ FENTON: I'm a neuroscientist and I study the biology of memory.

RANA EL KALIOUBY: I'm a computer scientist and I build technology that can read human emotions.

WILLIAMS: And I'm a mathematician, using big data to understand our modern world.

And we're tackling the biggest questions...

Dark energy?

ALL: Dark energy?

WILLIAMS: Of life...

MAN: There's all of these microbes, and we just don't know what they are.

WILLIAMS: And the cosmos.

♪ ♪ On this episode...

Artificial intelligence...

ALI FARHADI: Machines that can learn by themselves.

WILLIAMS: How smart are they?

PAUL MOZUR: It can flirt, make jokes, identify pictures.

It has changed the whole field.

FEI-FEI LI: We've made such huge progress so fast.

GEOFF HINTON: And it's going to make life a lot better.

WILLIAMS: But could it go too far?

PETER SINGER: If we screw it up-- massive consequences.

"NOVA Wonders"-- "Can We Build A Brain?"

Major funding for "NOVA Wonders" is provided by...

WILLIAMS: Inside a human brain there's about a hundred billion neurons.

And each one of them can connect to 10,000 others.

And from these connections comes...

ALL: ...everything.

♪ ♪ (cars whooshing, sparks crackling, firework exploding) WILLIAMS: The human brain can compose symphonies, create beautiful works of art... EL KALIOUBY: It allows us to navigate our world, to probe the universe, and to invent technology that can do amazing things.

(spacecraft engines igniting) WILLIAMS: Now, some of that technology is aimed at replicating the brain that created it-- artificial intelligence, or A.I.

But has it even come close to what these babies can do?

FENTON: For ages, computers have done impressive stuff.

They crack codes, master chess, operate spacecraft.

But in the last few years, something has changed.

Suddenly, computers are doing things that can seem... much more human.

Today, computers can see, understand speech, even write poetry.

How is all this possible?

And how far will it go?

FENTON: Could we actually build a machine that's as smart as us?

WILLIAMS: One that can imagine, create, even learn on its own?

FENTON: How would a machine like that change society?

WILLIAMS: How would it change us?

I'm Rana El Kaliouby.

I'm André Fenton.

I'm Talithia Williams.

And in this episode "NOVA Wonders"-- "Can We Build A Brain?"

And if we could... should we?

♪ ♪ ♪ ♪ WILLIAMS: Many think the A.I. revolution is happening not just in Silicon Valley, but here.

MOZUR: We're so used to America being the absolute primary center of the world when it comes to this stuff.

And now we're starting to see fully different ideas come out of China.

People are much more used to using their smart phones for everything.

WILLIAMS: In China, chat dominates daily life, even in its most intimate moments.

(keys tapping, beeping) ♪ ♪ (keys tapping, beeps) ♪ ♪ (keys tapping, beeping) WILLIAMS: These might seem like your typical conversations between friends, but they're not.

They're with this.

Meet Xiaoice-- or "Little Ice"-- a chatbot created by Microsoft.

LILI CHENG: A chatbot's just software that you can talk to.

A really bad example is when you call a company.

ROBOTIC VOICE: I'm sorry.

Press "5" to return to the main menu.

(glass cracking) WILLIAMS: But Xiaoice is in a whole other league.

(beeps) She's had over 30 billion conversations with over 100 million people.

(Xiaoice speaking Chinese dialect) She's even a national celebrity-- delivering the weather, appearing on TV shows, singing pop songs.

(singing) But the craziest thing is...

HSIAO-WUEN HON: People cannot tell difference whether it's a bot or a real human.

WILLIAMS: You heard right; in fact, many of her users treat her no differently from a real friend.

(speaking Chinese dialect) (translated): Once I remember feeling really down and stressed out, and she kept consoling me.

She told me that actually life is beautiful and even sung me a song and said "I love you"-- I felt very touched.

(speaking Chinese dialect) (translated): For example, if you had a fight at work or your boss scolded you, you might be afraid to tell your friends since they might spread the story.

But with Xiaoice you don't have to worry.

To me, Xiaoice is a very good friend.

DI LI: So Xiaoice is a lot of people's best friend, including me.

Yeah.

MICHAEL BICKS: But hold it, she's not human.

What's the difference?

♪ ♪ WILLIAMS: Di Li is a senior engineer at Microsoft and one of Xiaoice's creators.

To him, she is much more than a piece of software.

(keys tapping, beeping) What does it mean when millions of people embrace a computer program as a friend?

Does this mean Xiaoice is "intelligent?"

Intelligent like us?

HON: Of course Xiaoice is intelligent.

Xiaoice can recognize your writing, can recognize your voice.

MOZUR: She can flirt.

She can make jokes.

She can identify pictures.

She can... you know, I mean, I think by all rights, you'd have to say that she is.

FENTON: Which brings us to the question-- what is intelligence anyway?

WILLIAMS: Traditionally, people in the field of A.I. have thought of "intelligence" as the ability to do intelligent things, like play chess.

NEWSREEL NARRATOR: In the chess playing machine, a computer is programmed with the rules of the game...

NEWSREEL NARRATOR 2: ...with 200 million possible moves every second.

But this kind of thinking only got us so far.

Checkmate.

We got supercomputers that can beat chess champions, model the weather, play "Jeopardy."

ALEX TREBEK: Watson?

WATSON: Who is Isaac Newton?

You are right!

WILLIAMS: They were each experts at specific tasks, but none could tell you what chess is, that rain is wet, or why money is important to us-- they had no understanding of the world, no common sense.

(mechanisms whirring) GREG CORRADO: We thought just because computers were very good at math, that they would suddenly be very good at everything.

But it turns out that what a typical three-year-old could do drastically outstrips what any current artificial intelligence system can do.

COX: These things are eventually coming, we have a hard time predicting exactly when, but I think that building an artificial intelligence is actually going to be the crowning achievement of humanity.

Now wait, hold on a second.

Even if we decided we wanted to build a human-like intelligence, what makes us think we could?

Consider your brain.

Isn't there some sort of ineffable magic in there that makes me me, and you you?

I don't think so.

Based on what we've learned from neuroscience, I think that fundamentally every thought you've ever had, every memory, even every feeling is actually the flicker of thousands of neurons in your brain.

We are biological machines.

Now for some people, that might sound depressing, but think about it: how does this make this?

♪ ♪ (fireworks crackling) WILLIAMS: Somehow, these crackling connections between brain cells produce thoughts and an understanding of our world.

The question is how.

For the last 60 years, computer scientists have believed if we could just figure that out, we could build a new breed of machine-- one that thinks like us.

So where would you start?

(birds chirping) If you really want to build intelligent machines, I believe that vision is a huge part of it.

WILLIAMS: Fei-Fei Li's mission is to teach computers to see.

LI: Vision is the main tool we use to understand the world.

(alarm clock ringing) (ringing stops) WILLIAMS: A world so complex, we rarely stop to think how much our eyes and brain process for us-- all in a matter of milliseconds.

YANN LECUN: We take vision for granted as human because we don't consider this as a particularly intelligent task.

But, in fact, it is.

It takes up about a quarter to a third of our entire brain to be able to do vision.

WILLIAMS: That's not to say vision is intelligence, but it's hard to appreciate just how complex a task it is, until you try to get a computer to do it.

(bell jingling) Take recognizing pictures of cats, for instance.

(meowing) LI: So you think it'll be easy for a computer to recognize a cat, right?

A cat is a simple animal with round face, two pointy ears...

CORRADO: A traditional programming approach to identifying a cat would be that you would build parts of the program to accomplish very specific tasks, like recognizing cat ears, fur, or a cat's nose.

LECUN: But what if the cat is in this, kind of a funny position or you don't see the cat's face, you see it from the back or the side.

They can be sleeping, they can be lying down... Cats come in different shapes, they come in different colors.

They come in different sizes.

KRISHNA: Running around, attempting a jump...

JOHNSON: ...curled up in a little ball, headfirst stuffed into a shoe.

You just cannot imagine how to write a program to take care of all those conditions.

♪ ♪ WILLIAMS: But that is exactly what Fei-Fei set out to do-- figure out how to get computers to recognize not just cats, but any object.

She started not by writing code, but by looking at kids.

LI: Babies from the minute they're born are continuously receiving information.

Their eyes make about five movements per second, and that translates to five pictures, and by age three, it translates to hundreds of millions of pictures you've seen.

WILLIAMS: She figured that if a child learns by seeing millions of images, a computer would have to do the same.
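The arithmetic behind "hundreds of millions of pictures" can be checked with a rough back-of-the-envelope sketch. The five eye movements per second and the age of three come from the interview above; the figure of 12 waking hours per day is an assumption made here for illustration only.

```python
# Rough estimate of how many "pictures" a child has seen by age three.
MOVEMENTS_PER_SECOND = 5        # from the interview
YEARS = 3                       # from the interview
WAKING_HOURS_PER_DAY = 12       # assumption, not stated in the program

SECONDS_PER_HOUR = 3600
DAYS_PER_YEAR = 365

pictures_seen = (
    MOVEMENTS_PER_SECOND
    * SECONDS_PER_HOUR
    * WAKING_HOURS_PER_DAY
    * DAYS_PER_YEAR
    * YEARS
)
print(f"{pictures_seen:,}")  # 236,520,000
```

Under that assumption the total lands at a few hundred million, which is consistent with the order of magnitude quoted.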

But there was a hitch...

ALL: Data.

KRISHNA: We started realizing that one of the biggest limitations to being able to train machines to identify objects is to actually collect a dataset of a large number of objects.

WILLIAMS: And a little thing called the internet would help solve that problem.

Let's take a second here to talk data.

All those cat videos, Facebook posts, selfies and tweets.

Turns out we create a ton of it.

In fact every day, our collective digital footprint adds up to 2.5 billion gigabytes of new data-- that's the same amount of information in 530 million songs, 250,000 Libraries of Congress, 90 years of HD video, and that's each and every day.

But how to make sense of it all?

COX: The real trick of that isn't just that it needs tons and tons of data.

It needs tons and tons of labeled data.

WILLIAMS: Computers don't know what they're looking at-- someone would have to label all that data.

Here's where Fei-Fei had an idea: We crowdsourced, crowdsourced, crowdsourced, crowdsourced.

WILLIAMS: She crowdsourced the problem.

Paying people pennies a picture, she recruited thousands of people from across the globe to label over ten million images, creating the world's largest visual database-- ImageNet.

Now suddenly we have a dataset of millions and tens of millions.

WILLIAMS: Next, she set up an annual competition to see who could get a computer to recognize those images.

This was very exciting because a lot of schools from around the world started competing to identify thousands of categories of different types of objects.

WILLIAMS: At first, computers got better and better, until they didn't.

JOHNSON: Performance just sort of stalled and there were not really any major new ideas coming out.

WILLIAMS: The computers were still making bone-headed mistakes.

LI: We were still struggling to label objects.

There were questions about why are you doing this.

WILLIAMS: But then, in the third year of the contest, something changed.

One team showed up and blew the competition away.

The leader of the winning team was Geoff Hinton.

HINTON: The person who evaluated the submissions had to run our system three different times before he really believed the answer.

He thought he must have made a mistake because it was so much better than the other systems.

The change in performance on ImageNet was tremendous.

So until 2012 the error rate was 26%.

When Geoff Hinton participated, they got 15%.

The year after that it was 6%, and then the year after that it was 5%.

Now it's 3%, and it's basically reached human performance.

WILLIAMS: For the first time, the world had a machine that could recognize tens of thousands of objects-- Irish setter, skyscraper, mallard, baseball bat-- as well as we do.

Now, this huge jump got everyone really excited.

WILLIAMS: So how did Geoff and his team do it?

HINTON: The most intelligent thing we know is the brain, so let's try and build A.I. by mimicking the way the brain does it.

WILLIAMS: As it happens, he used a kind of program first invented decades before, but that had long ago fallen out of favor, dismissed as a dead end.

The majority opinion within A.I. was that this stuff was crazy.

WILLIAMS: It's called "neural networks," or deep learning, and since sweeping ImageNet, it's been taking the field by storm.

EL KALIOUBY: So how did they do it?

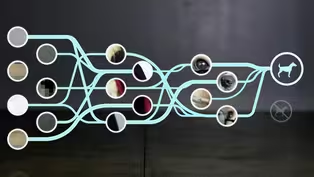

How does deep learning actually work?

Let's break it down, with a little help from man's best friend.

Now when you or I look at this creature... (barks) we know it's a dog.

But when a computer sees him, all it sees is this.

How do I get a computer to recognize that this photo, or this one, or that one, is a photo of a dog?

It turns out that the only reliable way to solve this problem is to give the computer lots of examples and have it figure out on its own, the average, the numbers, that really represent a dog.

Here's where deep learning comes in.

As you might recall, it's a program based on the way your brain works, and it looks something like this: here we have layers of sensors, or nodes.

Each feeds information in one direction from input to output.

The input layer is kind of like your retina, the part of your eye that senses light and color.

In the case of this photo of Buddy, it senses dark over there, light over here.

This information gets fed to the next layer, which can recognize basic features like edges.

That then goes to the next layer, which recognizes more complex features like shapes.

Finally, based on all of this, the output layer labels the image as either "dog"... (dog barks) or "not dog."

(buzzer sounds) But here's the kicker-- and this is what's revolutionary about deep learning and neural networks.

At first, the computer has no idea what it's looking at.

It just responds randomly.

But each time it gets a wrong answer-- Information flows backwards through the network saying, "You got the answer wrong," so anybody who was supporting that answer, your connection strength should get a bit weaker.

EL KALIOUBY: And anybody who was supporting the right answer?

Their connections get stronger.

Back and forth, it does this over and over again until, thousands of images later, the computer teaches itself the features that define "dogginess."

The magic of it is that the system learns by itself, it figures out how to represent the visual world.
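The loop described above (guess, compare with the label, send the error backwards, weaken or strengthen connections) can be sketched in a few lines. This is a toy illustration, not the network used on ImageNet: the four 4-"pixel" images, the layer sizes, the learning rate, and the function names `forward` and `train` are all hypothetical choices made for this example.

```python
import math
import random

# Four made-up "images" of 4 pixels each (brightness 0..1).
# Label 1 = "dog", 0 = "not dog". Purely illustrative data.
EXAMPLES = [
    ([1.0, 1.0, 0.0, 0.0], 1),
    ([0.9, 0.8, 0.1, 0.0], 1),
    ([0.0, 0.0, 1.0, 1.0], 0),
    ([0.1, 0.0, 0.9, 1.0], 0),
]

def forward(pixels, w_hidden, w_out):
    """Feed information one way: input -> hidden layer -> output."""
    hidden = [math.tanh(sum(w * p for w, p in zip(row, pixels)))
              for row in w_hidden]
    total = sum(w * h for w, h in zip(w_out, hidden))
    output = 1.0 / (1.0 + math.exp(-total))  # near 1 means "dog"
    return hidden, output

def train(examples, n_hidden=3, epochs=2000, rate=0.5, seed=0):
    rng = random.Random(seed)
    n_inputs = len(examples[0][0])
    # Start with random connection strengths: the network knows nothing.
    w_hidden = [[rng.uniform(-0.5, 0.5) for _ in range(n_inputs)]
                for _ in range(n_hidden)]
    w_out = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
    for _ in range(epochs):
        for pixels, label in examples:
            hidden, output = forward(pixels, w_hidden, w_out)
            error = output - label  # how wrong the answer was
            for j in range(n_hidden):
                # Pass the error backwards to hidden unit j.
                hidden_error = error * w_out[j] * (1.0 - hidden[j] ** 2)
                # Weaken connections that supported a wrong answer,
                # strengthen those that supported the right one.
                w_out[j] -= rate * error * hidden[j]
                for i in range(n_inputs):
                    w_hidden[j][i] -= rate * hidden_error * pixels[i]
    return w_hidden, w_out

w_hidden, w_out = train(EXAMPLES)
for pixels, label in EXAMPLES:
    _, output = forward(pixels, w_hidden, w_out)
    print(label, "dog" if output > 0.5 else "not dog")
```

A real image classifier differs mainly in scale: millions of pixels and connections, many more layers, and far more labeled examples.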

WILLIAMS: But teaching computers to see, as it turns out, was only the beginning.

It's been a paradigm shift.

It was a paradigm shift.

I think deep learning is a paradigm shift.

WILLIAMS: Suddenly, with deep learning, anything seemed possible.

Around the world, A.I. labs raced to put neural networks into everything.

But it wouldn't be news to the rest of the world until one day in March 2016, in Seoul, South Korea... when world champion Lee Sedol steps onto the stage to challenge a machine in the game of Go.

(cameras clicking) ANNOUNCER: Starting tomorrow in South Korea a human champion will square off against a computer.

WILLIAMS: You might not know what Go is, but to much of the planet, it's bigger than football.

REPORTER: All right, folks, you're going to see history made.

Stay with us.

MATT BOTVINICK: I believe it was beyond the Super Bowl.

I mean, just millions and millions of people.

WILLIAMS: In fact, nearly 300 million people watched these matches.

BOTVINICK: This game is hugely popular in Asia.

The game goes back, I think, thousands of years, it's deeply connected with the culture.

People who play this game don't view it as an analytical, quasi-mathematical exercise.

They view it almost as poetry.

WILLIAMS: It's a board game, like chess, that demands a high level of strategy and intellect.

The goal is to surround your opponent's stones to capture as much area of the board as possible.

Players receive points for the number of spaces and pieces captured.

It might sound simple, but...

BOTVINICK: The number of possible board positions in Go is larger than the number of molecules in the universe.

It's just not going to work to exhaustively search everything that could happen.

So what you need are these gut feelings.
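A quick calculation gives a feel for the scale being described. A standard Go board has 19 x 19 points, and each point is empty, black, or white, so 3 to the power 361 is a simple upper bound on board configurations (it also counts arrangements that are not legal positions). The figure of 10 to the power 80 is a commonly cited rough estimate for atoms in the observable universe, used here as an assumption for comparison.

```python
# Upper bound on Go board configurations versus a rough particle count.
BOARD_POINTS = 19 * 19            # 361 intersections on a standard board
upper_bound = 3 ** BOARD_POINTS   # each point: empty, black, or white

ATOMS_ESTIMATE = 10 ** 80         # assumption: commonly cited rough estimate

print(len(str(upper_bound)))          # 173 digits
print(upper_bound > ATOMS_ESTIMATE)   # True
```

Even checking a trillion configurations per second would not make a dent in a number with 173 digits, which is why exhaustive search is ruled out.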

(Go stones jingling) HINTON: That's intuition.

That's the kind of thing computers can't do.

(indistinct chatter) WILLIAMS: Not according to these guys at Google's DeepMind in London.

They knew in Go no machine could ever win with brute force.

CORRADO: And it was only by bringing deep learning in particular to this area that we were able to build artificial systems that were able to "see" patterns on the board in the same way that humans see patterns on the board.

WILLIAMS: Using deep learning, DeepMind's AlphaGo analyzed thousands of human games and played itself millions of times, allowing it to invent entirely new ways to play the game.

COMMENTATOR: I think black's ahead at this point.

LECUN: AlphaGo was really a stunning result.

It's very humbling for humanity.

(laughs) COMMENTATOR: I think he resigned.

WILLIAMS: A loss heard 'round the world.

REPORTER: A clash of man against machine is over, and the machine won.

REPORTER 2: A victory over a human by a machine...

BOTVINICK: To see a machine play the game at a high level with moves that feel creative and poetic I think was a bit of a game changer.

KRISHNA: All of a sudden, it has changed the whole field.

(laughter) WILLIAMS: And it's not just winning at Go.

In the past few years, deep learning has invaded our everyday lives-- without most of us even knowing it.

ETZIONI: Deep learning is a big deal because of the results.

There're just little things or big things that we can do that we couldn't do before.

WILLIAMS: It's what allows smart devices like Alexa to understand you.

MAN: Alexa, how many feet in a mile?

ALEXA: One mile equals 5,280 feet.

WILLIAMS: It's what taught Xiaoice how to chat, and Facebook to pick you out of a crowd at your cousin's wedding.

CHRISTOF KOCH: We've suddenly broken through a wall.

When I started in this field, none of that was possible.

Now today you have machines that can effortlessly, in real time, recognize people, know where they're looking at.

So there has been breakthrough after breakthrough.

WILLIAMS: Now it's bested humans in many tasks.

LipNet can read your lips at 93% accuracy-- that's nearly double an expert lip reader.

Google Translate can "read" foreign languages in real time.

Hey Isabel, how's it going?

VOICE FROM PHONE: Hey Isabel, (speaking foreign language).

WILLIAMS: Even translate live speech.

(speaking foreign language) VOICE ON PHONE: Absolutely okay, thank you.

WILLIAMS: Deep learning programs have composed music, painted pictures, written poetry.

It's even sent Boston Dynamics's robot head over heels.

HINTON: For the foreseeable future, which I think is about five years, what we'll see is this deep learning invading lots and lots of different areas, and it's going to make life a lot better.

WILLIAMS: At least that's the hope-- just consider medicine.

LECUN: Deep learning systems are very good at identifying tumors in images, skin conditions, you know, things like that.

WILLIAMS: One of the first attempts with real patients was conducted by Dr. Rob Novoa, a dermatologist at Stanford's Medical School.

He knew nothing about deep learning until...

NOVOA: I came across the fact that algorithms could now classify hundreds of dog breeds as well as humans.

And when I saw this I thought, my God, if it can do this for dog breeds, it can probably do this for skin cancer as well.

♪ ♪ So we gathered a database of nearly 130,000 images from the internet, and these images had labels of melanoma, skin cancer, benign mole, and using those we began training our algorithms.

WILLIAMS: The next step was to see how it stacked up against human doctors.

NOVOA: The algorithms did as well as or better than our sample of dermatologists who were from academic practices in California and all over the country.

WILLIAMS: And all this can be put on a phone.

NOVOA: Give it a moment and it accurately classified it as a benign....

NOVOA: Technology has always changed the way we practice medicine, and will continue to do so, but I'm skeptical as to its ability to completely eliminate entire fields.

It will change them, but it won't eliminate them.

WILLIAMS: Rather than replace doctors, Rob thinks this will expand access to care.

NOVOA: In the future, a primary care doctor or nurse practitioner in a rural setting would be able to take a picture of this, and be able to more accurately diagnose what's going on with it.

WILLIAMS: So deep learning has given us machines that can see... hear... ...speak.

ALEXA: It might rain in Aliquippa tomorrow.

WILLIAMS: But to build an intelligence like ours, you're going to need a lot more.

EL KALIOUBY: Our devices know who we are, they know where we are, they know what we're doing, they have a sense of our calendar but they have no idea how we're feeling.

It's completely oblivious to whether you're having a good day, a bad day, are you stressed, are you upset, are you lonely?

WILLIAMS: In other words, our machines have no emotional intelligence.

(loud smash) And that's important-- our host, Rana El Kaliouby, would know-- she's devoted her career to solving just that.

It all started back when she was a grad student from Egypt at the University of Cambridge.

EL KALIOUBY: There was one day when I was at the computer lab and I was actually literally in tears because I was that homesick.

And I was chatting with my husband at the time and the only way I could tell him that I was really upset was to basically type, you know, "I'm crying."

And that was when I realized that, you know, all of these emotions that we have as humans, they're basically lost in cyberspace, and-and I felt we could do better.

WILLIAMS: But to do better how?

Rana's next stop was MIT, where she continued work on a new algorithm, one that could pick up on the important features of human behavior-- that tell you whether you're feeling happy, sad, angry, scared, you name it.

EL KALIOUBY: It's in your facial expressions, it's in your tone of voice, it's in your, like, very nuanced kind of gestural cues.

WILLIAMS: Because she thinks this could transform the way we interact with technology-- our cars could alert us if we get sleepy... (beeping) our phones could tell us whether that text really was a joke, our computers could tell if those web ads are wasting their time.

But where to start?

She decided to go with the most emotive part of the human body.

The way our face works is basically we have about 45 facial muscles.

So, for example, the zygomaticus muscle is the one we use to smile.

So you take all these muscle combinations, and you map them to an expression of emotion like anger or disgust or excitement.

The way you then train an algorithm to do that, is you feed it tens of thousands of examples of people doing each of these expressions.

WILLIAMS: At first her algorithm could only recognize three expressions, but it was enough to push her to take a leap.

EL KALIOUBY: I remember very clearly my dad was like, "What?

"You're leaving MIT to run a company?

Like, why would you ever do that?"

In fact, the first couple of years, I kept the startup a secret from my family.

WILLIAMS: Eventually, Rana would convince her parents, but convincing investors was a whole other story.

EL KALIOUBY: It is very unusual, especially for women coming from the Middle East to be in technology and to be leaders.

I remember this one time when I was supposed to be presenting to an audience and I walked into the room and people assumed I was the coffee lady.

WILLIAMS: And investors were not the hardest to convince.

All these doubts in my mind like are probably shaped by my upbringing, right, where women don't lead companies, and maybe I should be back home with my husband.

I think I've learned over the years to have a voice and use my voice and believe in myself.

WILLIAMS: And once she did that...

We have this golden opportunity to reimagine how, you know, how we connect with machines and, therefore, as humans how we connect with one another.

WILLIAMS: Today, Rana's company-- called Affectiva-- has raised millions and has a deep learning algorithm that can recognize 20 different facial expressions.

(beeps) Many of her clients are marketing companies who want to know whether their ads are working, and she's also developing software for automotive safety, but an application she's especially proud of is this.

NED SAHIN: Most autistic children struggle with the basic communication skills that you and I take for granted.

WILLIAMS: A collaboration with neuroscientist Ned Sahin and his company Brain Power that allows autistic children to read the emotions in people's faces.

Imagine that we have technology that can sense and understand emotion and that becomes like an emotion hearing aid, that can help these individuals understand in real time how other people are feeling.

I think that that's a great example of how A.I., and emotion A.I. in particular, can really transform these people's lives in a way that wasn't possible before this kind of technology.

♪ ♪ No doubt deep learning has accomplished a lot.

But how far will it go?

Will it ever lead to the so-called "holy grail" of A.I.-- a general intelligence like ours?

No, very unlikely, because it has challenges-- it's difficult to generalize, it's difficult to abstract, if the system meets something it's never encountered before, the system can't reason about it.

This is the problem of deep learning-- in fact, is the problem of A.I. in general today-- is that we have a lot of systems that can do one thing well.

ETZIONI: My best analogy to deep learning is we just got a power drill, and boy can you do amazing things with a power drill.

But if you're trying to build a house, you need a lot more than a power drill.

Which makes you wonder-- will we ever get there?

Can we ever build an intelligence that rivals our own?

I think we're a long way off from human-level intelligence.

There's been this sort of trend in A.I. maybe for the past 50 years of thinking that if only we could build a computer to solve this problem, then that computer must be generally intelligent and it must mean that we're just around the corner from having A.I.

WILLIAMS: Okay, so if it's not deep learning-- how?

FARHADI: What we need to do is we build machines that can learn in the world by themselves.

WILLIAMS: Like the way we do.

Humans are not born with a set of programs about how the world works.

Instead, with every blink... bang... and bruise, we acquire that knowledge by interacting with the environment.

♪ ♪ By the time we walk, we've developed a crucial skill we take for granted but is impossible to teach computers: common sense.

(laughter) I cannot leave an apple in the middle of the air.

It will drop.

If I push something toward the edge of the table, probably it's going to fall off the table.

If I throw something at you like that, you know that it's going to be projectile kind of movement.

(splats) All of those things are examples of things that are just so simple for human brain, but these problems are insanely difficult for computers.

WILLIAMS: Ali Farhadi wants computers to solve these problems for themselves.

But the real world is complicated, so he starts simple-- with a virtual environment.

We put an agent in this environment.

We wanted to teach the agent to navigate through this environment by just doing a lot of random movement.

WILLIAMS: At first it knows nothing about the rules that govern the world.

Like if you want to get to the window, you can't go through the couch.

FARHADI: The whole point is that we didn't explicitly mention any of these things to the robot and we wanted the robot to learn about all of these by just exploring the world.

WILLIAMS: For the robot it's a game.

Its goal: to get to the window, and each time it bumps into the couch it loses a point.

Eventually...

FARHADI: By doing lots of trial and error, the agent learns what are the things that I should do to increase my reward and decrease my penalties.

Over the course of millions of iterations, the robot would actually develop common sense.
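The trial-and-error game described above can be sketched with tabular Q-learning, a standard reinforcement learning technique swapped in here for illustration; the program does not say which algorithm the researchers used. The 4 x 4 room, the couch position, the reward values, and the names `step`, `learn`, and `greedy_path` are all hypothetical.

```python
import random

# A made-up 4x4 room. The agent starts in one corner, the window is in
# the opposite corner, and a couch occupies two cells in between.
ROWS, COLS = 4, 4
START, WINDOW = (0, 0), (3, 3)
COUCH = {(1, 1), (2, 1)}
MOVES = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # up, down, left, right

def step(state, move):
    """Try one move and return (next_state, reward)."""
    r, c = state[0] + move[0], state[1] + move[1]
    if not (0 <= r < ROWS and 0 <= c < COLS):
        return state, 0.0    # hit a wall: stay put, no reward
    if (r, c) in COUCH:
        return state, -1.0   # bumped into the couch: lose a point
    if (r, c) == WINDOW:
        return (r, c), 1.0   # reached the window: gain a point
    return (r, c), 0.0

def learn(episodes=2000, max_steps=500, alpha=0.5, gamma=0.9, seed=0):
    rng = random.Random(seed)
    # q[(state, action)] estimates how good each move is in each cell.
    q = {((r, c), a): 0.0
         for r in range(ROWS) for c in range(COLS) for a in range(4)}
    for _ in range(episodes):
        state = START
        for _ in range(max_steps):
            action = rng.randrange(4)  # purely random movement
            nxt, reward = step(state, MOVES[action])
            best_next = 0.0 if nxt == WINDOW else max(
                q[(nxt, a)] for a in range(4))
            target = reward + gamma * best_next
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = nxt
            if state == WINDOW:
                break
    return q

def greedy_path(q, max_steps=50):
    """Follow the best-looking move from each cell, starting at START."""
    state, path = START, [START]
    while state != WINDOW and len(path) <= max_steps:
        action = max(range(4), key=lambda a: q[(state, a)])
        state, _ = step(state, MOVES[action])
        path.append(state)
    return path

q_table = learn()
path = greedy_path(q_table)
print(path)
```

Nothing in the code tells the agent that couches are solid or where the window is; the route around the couch emerges only from the points gained and lost during random exploration.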

WILLIAMS: But that's just the first step.

(beeping) You can actually get this knowledge that this agent learned in this synthetic environment, move it to an actual robot and put that robot in any room and that robot should be able to operate in that room.

WILLIAMS: This robot has never been in this room.

Think of it as a toddler made of metal and plastic.

ROOZBEH MOTTAGHI: This is a big deal because the robot wakes up in a completely unknown environment.

So it needs to basically match what it has seen before in the virtual environment, with what it sees now in the real environment.

WILLIAMS: Its goal sounds ridiculously simple.

ERIC KOLVE: So now the robot is searching for where it might find the tissue box.

FARHADI: What makes this hard for this specific one is that the tissue box is not even in the frame right now.

It has to move around to find this little box.

KOLVE: It's going to scan the room left and right until it can latch onto something that gives it some indication of where it is and then move forward towards it.

FARHADI: I think it got it now.

WILLIAMS: If after 60 years of trying, this is state-of-the-art, that probably says something about the state of A.I.

RODNEY BROOKS: When we look around today, at things in A.I., we can see little pieces of lots of humanity, but they're all very fragile.

So I think we're just a long, long way from understanding how intelligence works yet.

There is a huge gap between where we are and what we need to do to build this general unified intelligent agent that can act in the real world.

Ultimately, ideally one day we'll be there.

But we are really far from that point.

LECUN: Before we reach human-level intelligence in all the areas that humans are good at, it's going to take significant progress and not just technological progress, but scientific progress.

WILLIAMS: If A.I. is ever going to get there, many think it will have to go beyond neural nets and model even more closely how the actual brain works.

COX: If we're going to really get down to the sort of core algorithms of how we want to teach machines how to learn, I think we're going to have to actually open up the box and look inside and figure out how things really work.

WILLIAMS: One example of this approach is called neuromorphic computing.

Instead of writing software like deep learning, scientists like Dharmendra Modha draw direct inspiration from the brain to build new kinds of hardware.

MODHA: The goal of brain-inspired computing is to bridge the gap between the brain and today's computers.

WILLIAMS: You might not realize it, but compared to your brain, computer hardware today requires vast amounts of energy.

Consider DeepMind's AlphaGo, the machine that beat Lee Sedol at Go.

ETZIONI: Just think about these two machines-- the AlphaGo hardware and the human brain.

The human brain, right, it's sitting right here.

It's tiny, it's powered by let's say 60 watts and a burrito.

AlphaGo is a cavernous beast, even in this day and age, you know, thousands of processing units and a huge amount of electricity and energy and so on.

WILLIAMS: In fact, DeepMind used 13 data server centers and just over 1 megawatt to power AlphaGo.

That's 50,000 times more energy than Lee Sedol's brain.
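
The comparison above can be checked with a few lines of arithmetic. This is a rough sketch using only the rounded figures quoted in the program; the variable names are made up for the example. Watts measure power (energy per unit time), so the ratio compares power draw. Note that a 50,000-fold ratio against 1 megawatt implies a brain running on about 20 watts, while the 60-watt figure mentioned earlier would give a smaller ratio.

```python
# Figures as quoted in the program, rounded; names are illustrative.
ALPHAGO_WATTS = 1_000_000      # "just over 1 megawatt"
QUOTED_RATIO = 50_000          # "50,000 times more"
QUOTED_BRAIN_WATTS = 60        # "let's say 60 watts and a burrito"

# Brain power implied by the 50,000x claim.
implied_brain_watts = ALPHAGO_WATTS / QUOTED_RATIO

# Ratio you would get if the brain really drew 60 watts.
ratio_at_60_watts = ALPHAGO_WATTS / QUOTED_BRAIN_WATTS

print(f"Implied brain power: {implied_brain_watts:.0f} W")
print(f"Ratio at 60 W: about {ratio_at_60_watts:,.0f}x")
```

Either way, the gap is four to five orders of magnitude, which is the point of the comparison.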

(Go stones clattering) MODHA: The human brain is three pounds of meat, 80% water.

Occupies the size of a two-liter bottle of soda, consumes the power of a dim light bulb and yet is capable of amazing feats of sensation, perception, action, cognition, emotion, and interaction.

WILLIAMS: So why is the brain so much more efficient?

Engineers have pinned down a few clues.

For one, traditional computers work by constantly shuttling data from memory-- where it's stored-- to the CPU, where it's crunched.

This constant back-and-forth eats up a lot of juice.

Today's computers fundamentally separate computation from memory which is highly inefficient, whereas our chips, like the brain, combine computation, memory, and communication.

♪ ♪ WILLIAMS: The chip is called TrueNorth, and its architecture combines memory and computation.

For certain applications, this design uses a hundred times less energy than a traditional computer.

And it's worth pondering the consequences-- funded by the Defense Department, the Army and the Air Force are already testing the chip to see if it can help drones identify threats and pilots make split-second targeting decisions.

Until now, the only possible way to do that was with banks of computers thousands of miles away from the battlefield.

MODHA: That's amazing because of the low power in the real-time response of TrueNorth allows this decision-making to happen without having to wait for a long time.

WILLIAMS: Of course, many fear technologies like this will eventually take human intelligence out of the loop.

COX: We're going to increasingly be giving over our decision-making ability to machines, and that's going to range from everything from, you know, how does the steering wheel turn in the car if somebody walks out into the road, to should a military drone target a person and fire.

(explosions) And handing those decisions over to the machines?

Well, that's a nightmare familiar to anyone who's seen the movies.

NATHAN: Ava, I said stop!

Whoa, whoa, whoa... (grunts) HAL 9000: I'm sorry, Dave, I'm afraid I can't do that.

WILLIAMS: If you're worried, you'd have good company.

Big thinkers like the late Stephen Hawking, Bill Gates, and Elon Musk have all made headlines warning about the dangers of A.I.

A.I. is a fundamental existential risk for human civilization.

WILLIAMS: It's a burning question for many of us-- are we just sitting ducks for the arrival of the robot overlords?

(Bleeping), that's so off the mark.

My immediate subconscious reaction is I laugh.

I want to challenge Elon Musk.

Show me a program that could even take a fourth grade science test.

Reality seems to paint a different picture entirely, one where achieving an intelligence like ours-- never mind one that would want to kill us-- is far away.

Instead, potential threats from A.I. might be much more mundane.

Think about it.

Without so much as a blink, we've surrendered control to systems we do not understand.

Planes virtually pilot themselves, algorithms determine who gets a loan and what you see in your news feed.

Machines run world markets.

Today's A.I. would seem to hold tremendous promise and peril.

♪ ♪ Just consider self-driving cars.

PETER RANDER: Self-driving cars are one of the really first big opportunities to see A.I. get into the physical world.

This physical interaction with the world with intelligence behind it, it's-it's huge.

COX: You're talking about having an actual object going out into the world, interacting with other agents.

It has to interact with people, pedestrians, cyclists.

Has to deal with different road conditions.

WILLIAMS: They're also a pretty good litmus test for reality versus hype.

There seems to be a tremendous PR war going on, who can make the most outrageous claims.

It makes it hard to sort through, then, what's real and what's smoke and mirrors.

WILLIAMS: A glance online would make it appear as if self-driving cars are right around the corner when, in fact, it'll likely be decades before one is in your driveway.

ETZIONI: So every year there are gonna be self-driving cars with more abilities.

But it's gonna be a really long time before the car can completely take over and you can take a nap.

♪ ♪ WILLIAMS: For one, almost under all conditions, they still need a safety driver.

This one belongs to Argo-- the center of Ford's self-driving efforts.

BRETT BROWNING: Lisa here's got her hands in a position where she can really have a very fast reaction time to take over from the car.

This allows us to have a very short leash on the system.

♪ ♪ WILLIAMS: Even after logging millions of miles, the only places you can find truly autonomous vehicles today are either on test tracks or carefully chosen routes that have been meticulously mapped.

And even under those conditions, neither Argo nor its competitors can reliably drive in the snow or rain.

Nonetheless, many engineers are confident that these problems will eventually be solved.

The question is when.

We can debate five years, ten years, 20 years.

But absolutely there's a future in which most cars are self-driving.

BROOKS: If we go out far enough, we won't have any human drivers, ultimately, but it's a lot further off than I think a lot of the Silicon Valley startups and some of the car companies think.

WILLIAMS: And if that day comes, there could be a huge upside.

KOCH: Dramatic reduction in traffic density, because we don't need as many cars if the cars are being used all the time.

Old people won't have to give up their driver's license, we won't have drunk driving.

(siren blaring) RANDER: About 40,000 people died in the U.S. last year in auto accidents.

And that number is huge, and it's a million worldwide.

WILLIAMS: The vast majority of which are due to human error.

In fact, car crashes are a leading cause of death (car honking) in the U.S. On the other hand, taking us out of the equation raises some big ethical questions.

A woman was hit and killed by a driverless Uber vehicle in Tempe, Arizona, last night.

WILLIAMS: This accident was big news.

It was the first of its kind, but it almost certainly won't be the last.

SINGER: When the machine makes the wrong decision, how do we figure out who's to be held responsible?

What you have is a series of questions that our laws are really not all that ready for.

WILLIAMS: And then there's the issue of jobs.

At the moment, these vehicles are so expensive they only make sense for companies that have fleets that could be used 24/7.

FORD: So the early adopters won't be the individual customers, it'll be big fleets.

WILLIAMS: Like trucks.

Because they mostly run on predictable highway routes, they might be the first self-driving vehicles you'll see in the next lane.

COX: We're at the point where highway driving in a truck with an autonomous vehicle will be solved in the next five, ten years, so those are all jobs that are going to go away.

FORD: There will be economic disruption.

If you think of things like truck drivers, taxi drivers, Uber and Lyft drivers, we need to have this discussion as a society and how are we going to prepare for this.

WILLIAMS: And what if those 3.5 million truck drivers in the U.S. are just the canary in the coal mine?

DOMINGOS: We have learned a certain number of things, you know, in the last 50 years of A.I. and we understand that, like on the ranking of things to worry about, Skynet coming and taking over doesn't even rank in the top ten.

It distracts attention from the more urgent things like, for example, what's going to happen to jobs.

♪ ♪ WILLIAMS: For a glimpse into the future, consider one of the largest companies on the planet-- Amazon.

Whether you're aware of it or not, that pair of socks you ordered last week comes from a place like this.

TYE BRADY: Amazon has tremendous scale.

We have fulfillment centers that are as large as 1.25 million square feet.

That's like 23 football fields, and in it we'll have just millions of products.

WILLIAMS: To deal with that scale, Amazon has built an army of robots.

BRADY: Like a marching army of ants that can constantly change its goals based on the situation at hand, right.

So, our robotics are very adaptive and reactive in order to extend human capability to allow for more efficiencies within our own buildings.

WILLIAMS: And there's plenty more where those came from.

Every day, this facility in Boston "graduates" a new batch of machines.

BRADY: All of the robots that you see that are moving the pods have been built right here in Boston.

I call it the nursery, where the robots are born.

They'll be built, they'll take their first breath of air, they'll do their own diagnostics.

Once they're good, then they'll line up for robot graduation, and then they will swing their tassels to the appropriate side, drive themselves right onto a pallet, and go direct to a fulfillment center.

WILLIAMS: To some of us, this moment belies a dark sign of what's to come: a future that doesn't need us, one where all jobs-- not just cab drivers and truckers-- are taken by machines.

But Amazon's chief roboticist doesn't see it that way.

BRADY: The fact is really plain and simple: the more robots we add to our fulfillment centers, the more jobs we are creating.

The robots do not build themselves.

Humans design them, humans build them, humans deploy them, humans support them.

And then humans, most importantly, interact with the robots.

When you look at that, this enables growth.

And growth does enable jobs.

WILLIAMS: Certainly, history would seem to bear him out.

Since the Industrial Revolution, new technologies-- while displacing some jobs-- have created new ones.

LECUN: There's nothing special about A.I. compared to, say, tractors or telephone or the internet or the airplane.

Every single technology that was deployed displaced jobs.

WILLIAMS: And the new jobs workers took, more often than not, raised wages and the standard of living for everyone.

DOMINGOS: 200 years ago, 98% of Americans were farmers.

98% of us are not unemployed now.

We're just doing jobs that were completely unimaginable back then, like web app developer.

SEBASTIAN THRUN: I'd argue as we invent new things, it lifts the plate for everybody.

Let's take inventions in the last 100 years that matter-- television, telephones, penicillin, modern healthcare.

I believe that the ability to invent new things lifts us all up as a society.

WILLIAMS: While this is the predominant view in the A.I. community, some think it ignores the reality of today's world.

SINGER: There's a long history of technology creators assuming that only good things would happen with their baby when it went out into the world.

Even if there are some new jobs created somewhere, the vast majority of people are not easily going to be able to shift into them.

That truck driver who loses their job to a driverless truck isn't going to easily become an app developer out in Silicon Valley.

COX: It's easy to think that automation-related job losses are going to be limited to blue-collar jobs, but it's actually already not the case.

Physicians, that's an incredibly highly educated, highly paid job, and yet, you know, there are significant fractions of the medical profession that are just going to be done better by machines.

♪ ♪ WILLIAMS: That being the case, even if changes like this in the past ultimately benefited the present, how do we know the pace of change hasn't altered the equation?

So I'm really concerned about the time scale of all of this.

Human nature can't keep up with it.

Our laws, our legal system has difficulty catching up with it and our social systems, our culture has difficulty catching up with it, and if that happens, then at some point things are gonna break.

(spacecraft engines igniting) SINGER: So if you're talking about something like artificial intelligence, this is a technology like any other technology.

You're not going to uninvent it.

You're not going to stop it.

If you want to stop it, you're going to first have to stop science, capitalism, and war.

(loud blast) WILLIAMS: But even if A.I. is a given, how we choose to use it is not.

LI: As technologists, as businesspeople, as policymakers, as lawmakers, we should be in the conversations about how do we avoid all the potential pitfalls.

EL KALIOUBY: We get to decide where this goes, right?

I think A.I. has the potential to unite us, it can really transform people's lives in a way that wasn't possible before this kind of technology.

BOTVINIK: What we do with A.I. is a decision that we all have to make.

This isn't a decision that's up to A.I. researchers or big business or government.

It's a decision that we, as citizens of the world, have to work together to figure out.

WILLIAMS: Artificial intelligence may be one of humanity's most powerful inventions yet.

The challenge is are we going to be smart enough to use it?

DOMINGOS: It's like Carl Sagan said, right, you know, "History is a race between education and catastrophe."

The race keeps getting faster.

So far education seems to still be ahead, which means that if we let up, you know, catastrophe could come out ahead.

The stakes are incredibly high for getting this right.

If we do it well, we move into an era of almost incomprehensible good.

If we screw it up, we move into dystopia.

♪ ♪ WILLIAMS: Rewriting the code of life.

WOMAN: DNA is really just a chemical.

MAN: You can change every species to almost anything you want.

WILLIAMS: Can this save lives?

Or bring back extinct creatures?

WOMAN: Could we bring a mammoth back to life?

(animal trumpets) NARRATOR: It's a revolution in biology.

WOMAN: This is rapid, man-made evolution.

NARRATOR: "NOVA Wonders"-- "Can We Make Life?"

Next time.

(babies crying) ♪ ♪ "NOVA Wonders" is available on DVD.

To order, visit shop.PBS.org, or call 1-800-PLAY-PBS.

"NOVA Wonders" is also available for download on iTunes.

♪ ♪
